AI Data Breaches Affect 1 in 5 UK Companies: Insider Leaks Highlight Internal Security Threats

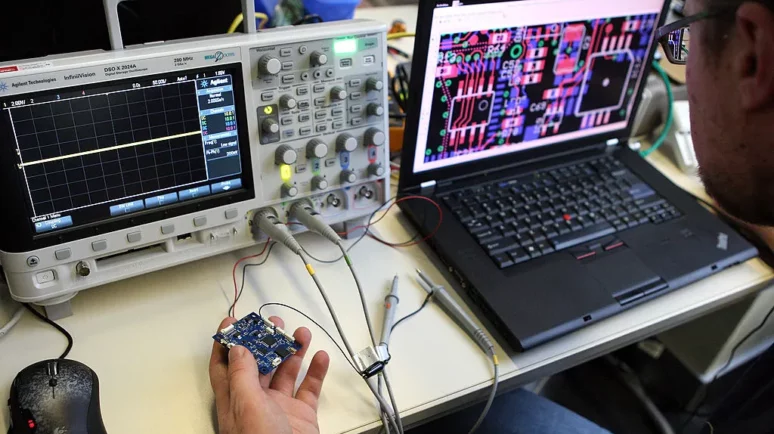

Data leaks are an inherent risk of cloud-based AI platforms. Photo by Joe Zlomek on Unsplash.

Key Takeaways

- One in five UK companies has experienced a data breach caused by staff using AI chatbots.

- The risk of leaking sensitive information has prompted many firms to ban employees from using chatbots.

- However, careless chatbot usage isn’t the only threat posed by company insiders.

Data breaches are usually blamed on hackers or espionage, but organizations are now increasingly likely to leak sensitive information through cloud-based AI tools like ChatGPT.

A recent survey of businesses in the UK found that 20% had experienced data breaches resulting from employees’ use of AI platforms. As their use becomes more commonplace, the inherently leaky nature of such tools poses a growing risk to organizations’ information security.

Chatbots Behind Data Leakage

Because chatbots typically run on remote servers, every time they interact with sensitive data there is a risk of it being leaked.

Although OpenAI and its peers claim user data is protected, rogue chatbots have been known to disclose private information to outside parties, either by accident or as the result of manipulation.

Last year, Amazon whistleblowers reported that the company’s Q chatbot had been observed leaking confidential data, including data center locations and details of unreleased features.

Fearing they could reveal trade secrets, companies like Samsung have banned employees from using chatbots on their work devices. Similar restrictions have been put in place by a string of major banks, which handle sensitive personal and commercial information.

The risk of AI data leakage isn’t just a concern for the private sector either.

In March, the US House of Representatives’ Office of Cybersecurity banned staffers from using Microsoft Copilot on their official devices. While the House isn’t against staff using chatbots per se, the office found that Copilot risked transferring data to unauthorized cloud services in violation of House cybersecurity policy.

An Emerging Insider Threat

What’s interesting about the emerging AI data risk is that the threat comes from inside organizations.

A recent survey by RiverSafe found that 75% of companies in the UK believe insider threats pose a greater risk to their organization than external ones.

For many firms, banning chatbots is unnecessarily extreme and would mean missing out on the productivity boost they offer.

Instead, companies are implementing new protocols to mitigate the risk of chatbot data breaches.

Security experts recommend not uploading personal data or verification credentials to remotely hosted AI platforms. Many chatbots can also be configured to delete conversation histories and minimize the amount of information they save.
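As a rough illustration of that advice, the sketch below strips email addresses and credential-like strings from a prompt before it leaves the company network. The `redact` function and both patterns are hypothetical examples rather than a vetted filter, and a real deployment would need much broader coverage.

```python
import re

# Hypothetical patterns for illustration only; they catch simple cases
# and will miss many real-world formats of personal data and secrets.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SECRET": re.compile(
        r"(?i)\b(password|passwd|api[_-]?key|token)\b\s*[:=]\s*\S+"
    ),
}


def redact(prompt: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[REDACTED-{label}]", prompt)
    return prompt


if __name__ == "__main__":
    raw = "Contact jane@example.com, password: hunter2 please"
    print(redact(raw))
    # prints: Contact [REDACTED-EMAIL], [REDACTED-SECRET] please
```

A filter like this only reduces accidental exposure. It does not replace a policy on what staff may paste into a chatbot in the first place.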

But even AI developers themselves aren’t immune to insider threats.

AI Developers Ramp up Internal Security

Last year, Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI committed to investing in cybersecurity and “insider threat safeguards” as part of an agreement with the White House.

Building on that agreement, OpenAI recently moved to hire an insider risk investigator to deal with internal security threats.

In OpenAI’s case, the concern isn’t that hapless employees could inadvertently leak sensitive information through their use of chatbots. Rather, the company is more concerned about corporate espionage.

Rival developers have been known to employ underhanded tactics to get their hands on the latest AI research. In March, an ex-Google engineer was arrested for allegedly stealing commercial secrets while working for two Chinese AI competitors.

Shortage of Cybersecurity Experts

From irresponsible chatbot usage to corporate espionage, internal cybersecurity is a major business concern. Yet many organizations don’t have the necessary cybersecurity personnel to defend against internal threats.

The RiverSafe survey found that 83% of respondents felt their organization suffered from a cyber skills gap. This shortfall extends to the public sector too, with governments around the world looking to hire more cybersecurity experts.

In reality, internal security shortcomings are responsible for far more data breaches than hackers. Leaking sensitive information through chatbots might seem like a harmless mistake, and often it will be. But firms like Samsung, Citibank, and Morgan Stanley have decided that it isn’t worth the risk.