Microsoft and OpenAI Launch $2M Fund to Combat Deceptive AI Content and Boost AI Literacy

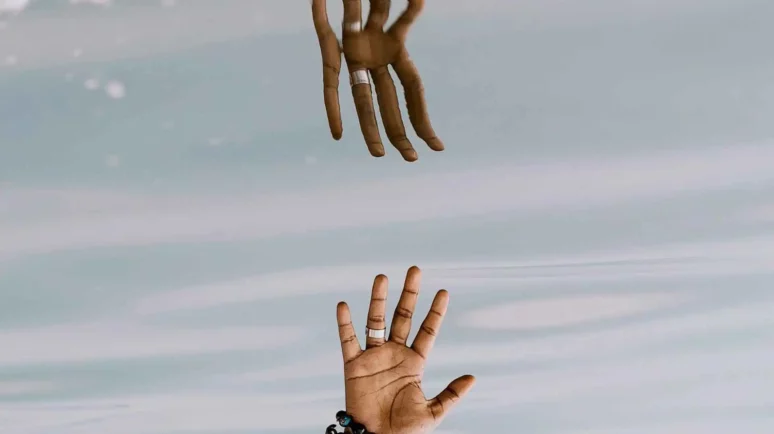

Older people are especially vulnerable to AI deception. Photo by Beth Macdonald on Unsplash.

Key Takeaways

- Microsoft and OpenAI have launched a new fund to boost AI education.

- The initiative provides grants to help promote AI education and awareness.

- Recipients include a project aimed at educating older people about the risks of AI.

Microsoft and OpenAI have launched a $2 million “Societal Resilience Fund” to help finance AI education and awareness initiatives.

With a focus on older people and vulnerable communities, the move recognizes rising concerns about the capacity of generative AI to deceive people.

Artificial Intelligence and Deception

While deceptive media predates modern generative AI, the ease with which people can create convincing deepfakes without any specialist skills or knowledge has significantly amplified the problem.

Research has demonstrated that older people are generally worse at identifying AI-generated voice and images. And many younger social media users will be familiar with older friends or relatives who appear especially vulnerable to deception, even if it appears harmless on the surface.

Recipients of grants from the Societal Resilience Fund include the Older Adults Technology Services (OATS) from AARP, which will use the money to develop a training program for American adults aged 50 and over.

“As AI tools become part of everyday life, it is essential that older adults learn more about the risks and opportunities that are emerging,” commented OATS Executive Director Tom Kamber.

“This vital project [will] make sure that older adults 50+ have access to training, information, and support—both to enhance their lives with new technology, and to protect themselves against its misuse,” he added.

Promoting Responsible AI

Of course, it isn’t just older people who can be duped by AI. The most realistic deepfakes can now appear convincing even to the most technologically adept audiences.

To that end, the Partnership on AI has also received a grant from Microsoft and OpenAI to help promote its Synthetic Media Framework.

Offering a set of guidelines for AI developers, content creators and distributors, the framework outlines best practices to help prevent the technology from being abused.

Such initiatives are important, but most technology providers are already at least nominally committed to the basic principles of safety and transparency. The problem is that existing safeguards often fail.

What can make a difference is better technical standards.

Content Provenance Standards

Another recipient of the new funding is the Coalition for Content Provenance and Authenticity (C2PA), which was established to create and promote technical standards for certifying the source and history of digital media.

While C2PA standards won’t make misleading content disappear, they should make it harder for malicious actors to bypass systems that label and identify AI-generated content.

The organization’s grant will be used to help spread awareness of content provenance standards and best practices.